Scrape newsletters and email them using Python, Dropbox and IFTTT

Our local school sends out a weekly newsletter - but they put it on the school website as a PDF and then send a link in a text message.

But I hate trying to read PDFs on my phone - I much prefer to get an email with an attachment.

So here's my hack to identify new newsletters on the site, copy them into Dropbox, and send them to myself as an email attachment using IFTTT.

Python script

First, I wrote a script to copy newsletter PDFs from the school website into a Dropbox folder, and put it in /home/ubuntu/newsletters/sync.py

#!/usr/bin/python3

import os
from datetime import date
from urllib.parse import urljoin

import bs4
import dropbox
import requests


def list_dropbox():
    # Names of the files already in the Dropbox folder
    dropbox_access_token = os.environ['DROPBOX_ACCESS_TOKEN']
    dbx = dropbox.Dropbox(dropbox_access_token)
    return [entry.name for entry in dbx.files_list_folder('').entries]


def save_to_dropbox(prefix, path, url):
    # Ask Dropbox to fetch the document at url and store it as /path
    dropbox_access_token = os.environ['DROPBOX_ACCESS_TOKEN']
    dbx = dropbox.Dropbox(dropbox_access_token)
    dbx.files_save_url('/' + path, url)


def find_pdfs(url, prefix, filenames):
    response = requests.get(url)
    soup = bs4.BeautifulSoup(response.text, "html5lib")
    for link in soup.find_all('a'):
        href = link.get('href')
        # Anchors with no href return None, so skip them
        if href and href.endswith('.pdf'):
            filename = prefix + ' ' + str(date.today().year) + ' : ' + link.text + '.pdf'
            filename = filename.replace('/', '-')
            if filename not in filenames:
                # urljoin() resolves relative links against the page URL
                save_to_dropbox(prefix, filename, urljoin(url, href))
                print('saved ' + filename)
            else:
                print('already got ' + filename)


filenames = list_dropbox()
find_pdfs('http://www.my-local-school.com/stream/newsletters/full/1/-//', 'JUNIORS', filenames)

Note, you may need to install some of the libraries used here:

sudo apt-get install python3-pip

sudo pip3 install beautifulsoup4 html5lib requests

sudo pip3 install dropbox

Here's the explanation:

The list_dropbox() function gets a list of all the filenames that are already in my Dropbox folder, accessed with my DROPBOX_ACCESS_TOKEN (you'll need to get your own from your Dropbox account).
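
One thing to watch: files_list_folder() hands results back a page at a time, so a folder with a lot of files may not come back in a single call. Here's a rough sketch of how the listing could follow the pages, using the has_more flag and files_list_folder_continue() from the Dropbox SDK. The name list_all_names is mine, purely for illustration, and it takes the client as an argument so it can be tried with a stand-in object.

```python
def list_all_names(dbx, folder=''):
    """Return the names of every entry in a Dropbox folder, following pagination.

    dbx is assumed to be a dropbox.Dropbox client (or any object offering
    the same files_list_folder / files_list_folder_continue methods).
    """
    result = dbx.files_list_folder(folder)
    names = [entry.name for entry in result.entries]
    # has_more stays True while pages remain; cursor says where to resume
    while result.has_more:
        result = dbx.files_list_folder_continue(result.cursor)
        names.extend(entry.name for entry in result.entries)
    return names
```

In the script above this would be called as list_all_names(dropbox.Dropbox(token)) in place of the list comprehension.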

The save_to_dropbox() function will save a copy of a document at a specified URL into the Dropbox folder (again, as determined by the DROPBOX_ACCESS_TOKEN).
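
Both functions read the token straight from os.environ, which raises a bare KeyError if the variable is missing. A small helper along these lines could give a friendlier message. This is only a sketch: get_access_token is a made-up name, and the environ parameter exists just so the function can be tried without touching the real environment.

```python
import os


def get_access_token(environ=os.environ):
    """Read the Dropbox token, failing with a readable message if it is missing."""
    token = environ.get('DROPBOX_ACCESS_TOKEN', '').strip()
    if not token:
        raise RuntimeError('DROPBOX_ACCESS_TOKEN is not set in the environment')
    return token
```

The client would then be created with dropbox.Dropbox(get_access_token()) in both places.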

The find_pdfs() function does most of the work - it fetches the website page containing the newsletters, and uses the 'BeautifulSoup' parser to extract the anchor tags from the HTML.
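
To show the link-extraction step on its own, here's a sketch that does the same job using html.parser from the standard library instead of BeautifulSoup, so it runs with nothing installed. It is not what the script uses. PdfLinkParser and extract_pdf_links are hypothetical names, and the HTML in the usage note is invented.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class PdfLinkParser(HTMLParser):
    """Collect (link text, absolute URL) pairs for anchors whose href ends in .pdf."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None  # URL of the PDF anchor we are currently inside, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == 'a':
            href = dict(attrs).get('href')
            # Anchors with no href, or pointing at something else, are ignored
            if href and href.endswith('.pdf'):
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == 'a' and self._href is not None:
            self.links.append((''.join(self._text).strip(), self._href))
            self._href = None


def extract_pdf_links(html, base_url):
    """Parse an HTML string and return the PDF links found in it."""
    parser = PdfLinkParser(base_url)
    parser.feed(html)
    return parser.links
```

For example, extract_pdf_links('<a href="/docs/week1.pdf">Week 1</a>', 'http://www.my-local-school.com/') gives back one pair: the text 'Week 1' and the link resolved to a full URL.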

Any anchor tag whose href ends in '.pdf' is assumed to be a newsletter PDF. A new filename is created from the link text plus the current year (and a prefix to avoid clashes if more than one site is being scraped).
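
Pulled out as a function of its own, the naming rule looks something like this. build_filename is just an illustrative name, and the year parameter is an addition of mine so the result can be checked for a fixed year.

```python
from datetime import date


def build_filename(prefix, link_text, year=None):
    """Build the Dropbox filename used to recognise a newsletter later."""
    if year is None:
        year = date.today().year
    filename = prefix + ' ' + str(year) + ' : ' + link_text + '.pdf'
    # '/' separates folders in a Dropbox path, so swap it out of the name
    return filename.replace('/', '-')
```

So build_filename('JUNIORS', 'Newsletter 12/03', 2019) gives 'JUNIORS 2019 : Newsletter 12-03.pdf'. Because the year is part of the name, any older newsletter still listed on the page when the year rolls over will get a new name and be saved again.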

The new filename is then checked against the list of filenames already in Dropbox, so that the file can be ignored if we've already got it. If we haven't got it, we save the new file in Dropbox using save_to_dropbox().

Finally, 'http://www.my-local-school.com/stream/newsletters/full/1/-//' is the school website page where all the newsletters are posted.

Cron Job

We need to run the script at intervals to check the website for new content, so add a cron job:

01 * * * * . /etc/profile; /home/ubuntu/newsletters/sync.py > /tmp/newsletters.log 2>&1

Note, the . /etc/profile is important in the cron job, as it loads the environment which will have the DROPBOX_ACCESS_TOKEN for accessing Dropbox - set it up something like this in /etc/profile:

export DROPBOX_ACCESS_TOKEN=UHJHYOzKfeferwAAAAUem_765cguygb7BWRc_vG0q2ldtjnm90tjscix2224nk

IFTTT Service

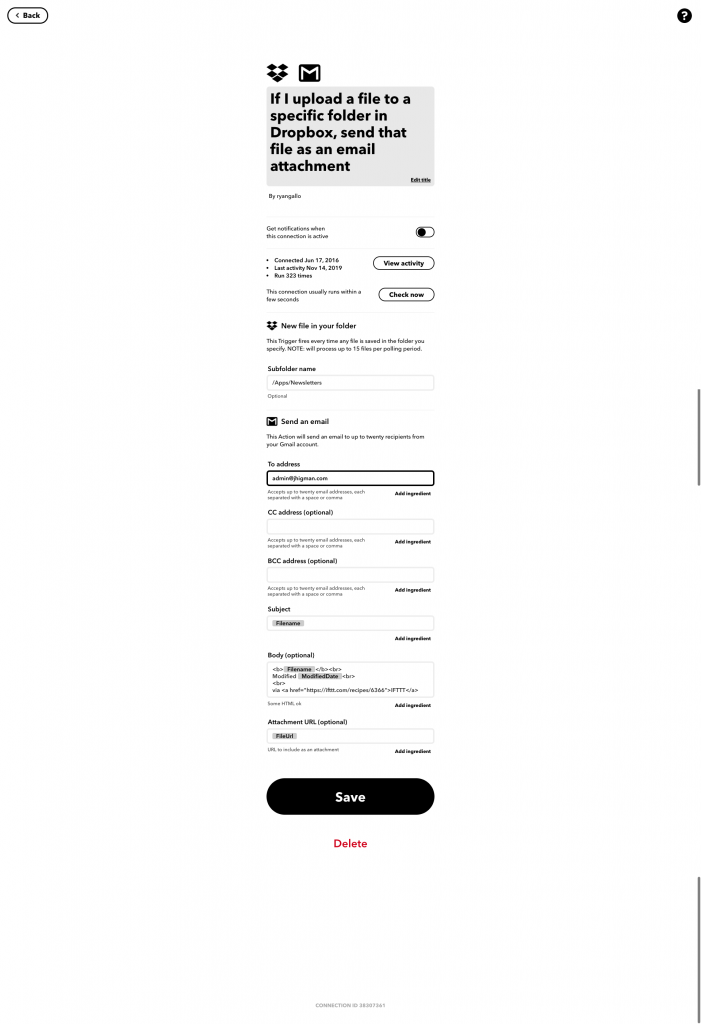

Next, setup an IFTTT service based on the "If I upload a file to a specific folder in Dropbox, send that file as an email attachment" template:

You'll need to give the IFTTT service permissions to access your Dropbox folder (/Apps/Newsletters) and your email service.

And that's it - the script will run every hour, copy any new newsletters from the school website to Dropbox, and IFTTT will email them to me as an attachment.

You can put the Python script and cron job on a server somewhere to have it running all the time.

Happy reading.